Run this tutorial

Click here to run this tutorial on binder:Tutorial: Interpolatory model order reduction for LTI systems¶

Here we discuss interpolatory model order reduction methods (also known as: moment matching, Krylov subspace methods, and Padé approximation) for LTI systems. In Tutorial: Linear time-invariant systems, we discussed transfer functions of LTI systems, which give a frequency-domain description of the input-output mapping and are involved in system norms. Therefore, a reasonable approach to approximating a given LTI system is to build a reduced-order model such that its transfer function interpolates the full-order transfer function.

We start with simpler approaches (higher-order interpolation at single point [Gri97]) and then move on to bitangential Hermite interpolation which is directly supported in pyMOR.

We demonstrate them on Penzl’s FOM example.

from pymor.models.examples import penzl_example

fom = penzl_example()

print(fom)

LTIModel

class: LTIModel

number of equations: 1006

number of inputs: 1

number of outputs: 1

continuous-time

linear time-invariant

solution_space: NumpyVectorSpace(1006)

Interpolation at infinity¶

Given an LTI system

the most straightforward interpolatory method is using Krylov subspaces

to perform a (Petrov-)Galerkin projection. This will achieve interpolation of the first \(2 r\) moments at infinity of the transfer function. The moments at infinity, also called Markov parameters, are the coefficients in the Laurent series expansion:

The moments at infinity are thus

A reduced-order model

where

with \(V\) and \(W\) as above, satisfies

We can compute \(V\) and \(W\) using the

arnoldi function.

from pymor.algorithms.krylov import arnoldi

r_max = 5

V = arnoldi(fom.A, fom.E, fom.B.as_range_array(), r_max)

W = arnoldi(fom.A.H, fom.E.H, fom.C.as_source_array(), r_max)

We then use those to perform a Petrov-Galerkin projection.

from pymor.reductors.basic import LTIPGReductor

pg = LTIPGReductor(fom, W, V)

roms_inf = [pg.reduce(i + 1) for i in range(r_max)]

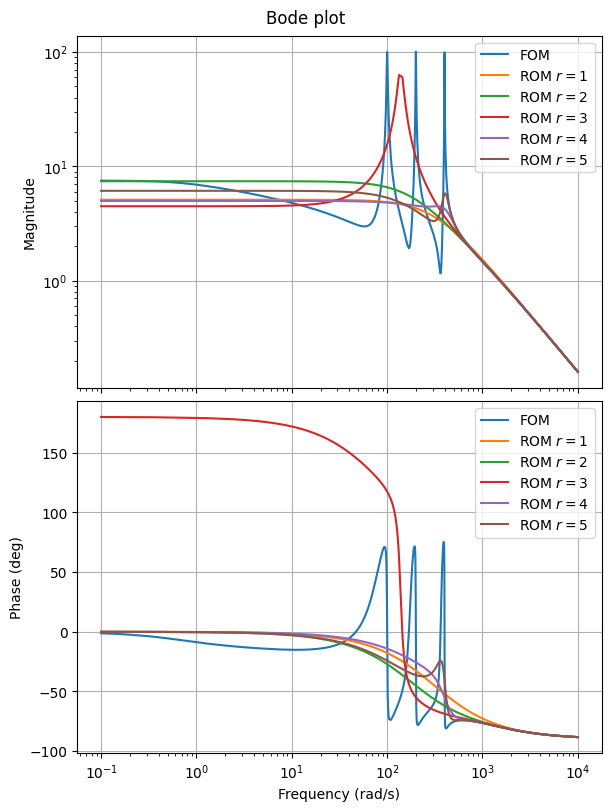

To compare, we first draw the Bode plots.

import matplotlib.pyplot as plt

plt.rcParams['axes.grid'] = True

w = (1e-1, 1e4)

bode_plot_opts = dict(sharex=True, squeeze=False, figsize=(6, 8), constrained_layout=True)

fig, ax = plt.subplots(2, 1, **bode_plot_opts)

fom.transfer_function.bode_plot(w, ax=ax, label='FOM')

for rom in roms_inf:

rom.transfer_function.bode_plot(w, ax=ax, label=f'ROM $r = {rom.order}$')

_ = ax[0, 0].legend()

_ = ax[1, 0].legend()

As expected, we see good approximation for higher frequencies. Drawing the magnitude of the error makes it clearer.

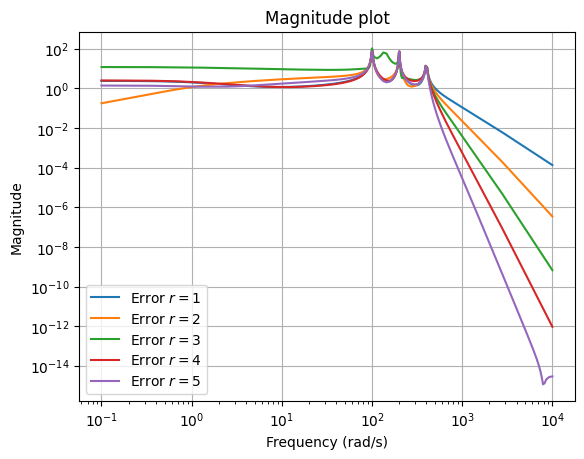

fig, ax = plt.subplots()

for rom in roms_inf:

err = fom - rom

err.transfer_function.mag_plot(w, ax=ax, label=f'Error $r = {rom.order}$')

_ = ax.legend()

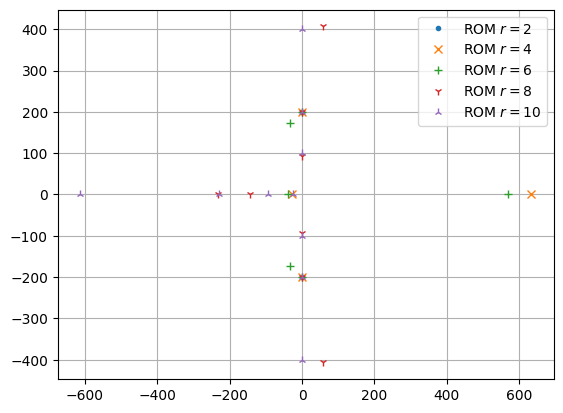

To check the stability of the ROMs, we can plot their poles and compute the maximum real part.

fig, ax = plt.subplots()

markers = '.x+12'

spectral_abscissas = []

for rom, marker in zip(roms_inf, markers):

poles = rom.poles()

spectral_abscissas.append(poles.real.max())

ax.plot(poles.real, poles.imag, marker, label=f'ROM $r = {rom.order}$')

_ = ax.legend()

print(f'Maximum real part: {max(spectral_abscissas)}')

Maximum real part: -9.324900122511472

We see that they are all asymptotically stable.

Interpolation at zero¶

The next option is using inverse Krylov subspaces (more commonly called the Padé approximation).

This will achieve interpolation of the first \(2 r\) moments at zero of the transfer function.

The moments at zero are

We can again use the arnoldi function

to compute \(V\) and \(W\).

from pymor.operators.constructions import InverseOperator

r_max = 5

v0 = fom.A.apply_inverse(fom.B.as_range_array())

V = arnoldi(InverseOperator(fom.A), InverseOperator(fom.E), v0, r_max)

w0 = fom.A.apply_inverse_adjoint(fom.C.as_source_array())

W = arnoldi(InverseOperator(fom.A.H), InverseOperator(fom.E.H), w0, r_max)

Then, in the same way, compute the Petrov-Galerkin projection…

pg = LTIPGReductor(fom, W, V)

roms_zero = [pg.reduce(i + 1) for i in range(r_max)]

…and draw the Bode plot.

fig, ax = plt.subplots(2, 1, **bode_plot_opts)

fom.transfer_function.bode_plot(w, ax=ax, label='FOM')

for rom in roms_zero:

rom.transfer_function.bode_plot(w, ax=ax, label=f'ROM $r = {rom.order}$')

_ = ax[0, 0].legend()

_ = ax[1, 0].legend()

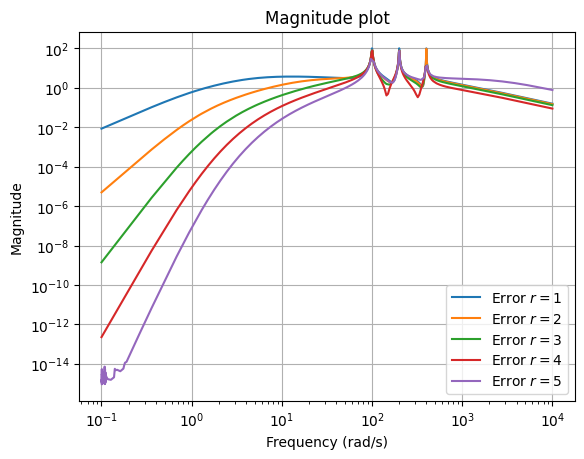

Now, because of interpolation at zero, we get good approximation for lower frequencies. The error plot shows this better.

fig, ax = plt.subplots()

for rom in roms_zero:

err = fom - rom

err.transfer_function.mag_plot(w, ax=ax, label=f'Error $r = {rom.order}$')

_ = ax.legend()

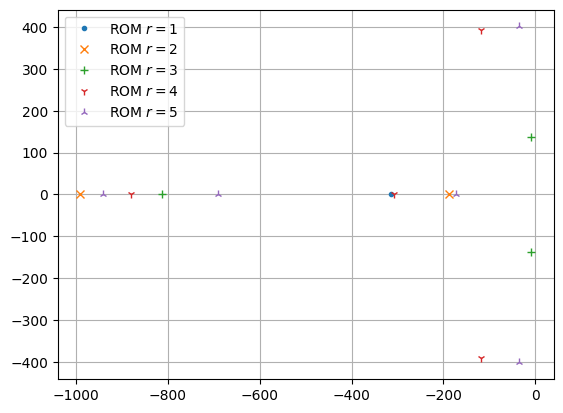

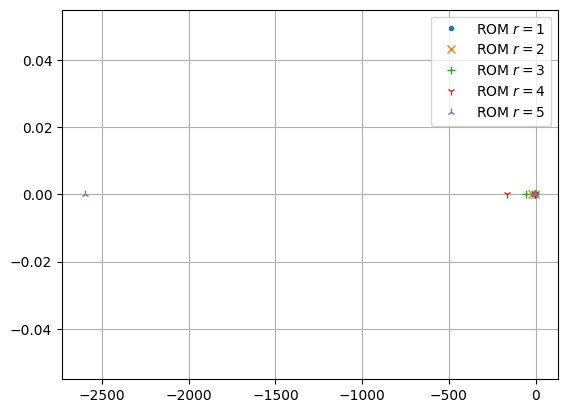

Checking stability using the poles of the ROMs.

fig, ax = plt.subplots()

spectral_abscissas = []

for rom, marker in zip(roms_zero, markers):

poles = rom.poles()

spectral_abscissas.append(poles.real.max())

ax.plot(poles.real, poles.imag, marker, label=f'ROM $r = {rom.order}$')

_ = ax.legend()

print(f'Maximum real part: {max(spectral_abscissas)}')

Maximum real part: -1.000002636038773

The ROMs are again all asymptotically stable.

Interpolation at an arbitrary finite point¶

A more general approach is using rational Krylov subspaces (also known as the shifted Padé approximation).

for some \(\sigma \in \mathbb{C}\) that is not an eigenvalue of \(A\).

This will achieve interpolation of the first \(2 r\) moments at \(\sigma\) of the transfer function.

The moments at \(\sigma\) are

To preserve realness in the ROMs, we interpolate at a conjugate pair of points (\(\pm 200 i\)).

from pymor.algorithms.gram_schmidt import gram_schmidt

s = 200j

r_max = 5

v0 = (s * fom.E - fom.A).apply_inverse(fom.B.as_range_array())

V_complex = arnoldi(InverseOperator(s * fom.E - fom.A), InverseOperator(fom.E), v0, r_max)

V = fom.A.source.empty(reserve=2 * r_max)

for v1, v2 in zip(V_complex.real, V_complex.imag):

V.append(v1)

V.append(v2)

_ = gram_schmidt(V, atol=0, rtol=0, copy=False)

w0 = (s * fom.E - fom.A).apply_inverse_adjoint(fom.C.as_source_array())

W_complex = arnoldi(InverseOperator(s * fom.E.H - fom.A.H), InverseOperator(fom.E), w0, r_max)

W = fom.A.source.empty(reserve=2 * r_max)

for w1, w2 in zip(W_complex.real, W_complex.imag):

W.append(w1)

W.append(w2)

_ = gram_schmidt(W, atol=0, rtol=0, copy=False)

We perform a Petrov-Galerkin projection.

pg = LTIPGReductor(fom, W, V)

roms_sigma = [pg.reduce(2 * (i + 1)) for i in range(r_max)]

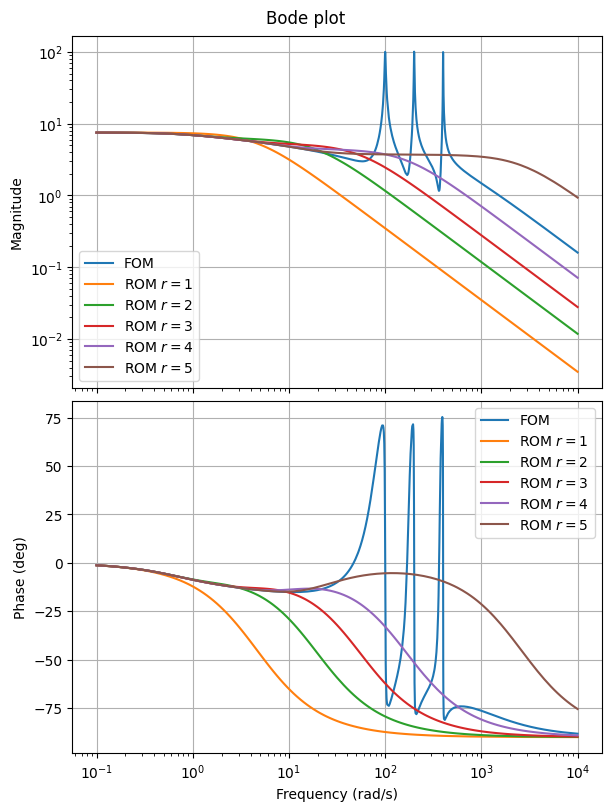

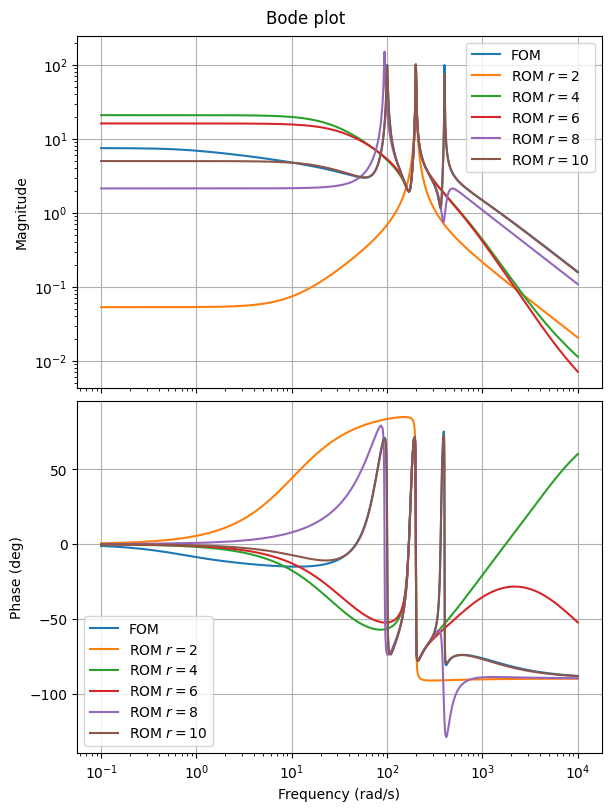

Then draw the Bode plots.

fig, ax = plt.subplots(2, 1, **bode_plot_opts)

fom.transfer_function.bode_plot(w, ax=ax, label='FOM')

for rom in roms_sigma:

rom.transfer_function.bode_plot(w, ax=ax, label=f'ROM $r = {rom.order}$')

_ = ax[0, 0].legend()

_ = ax[1, 0].legend()

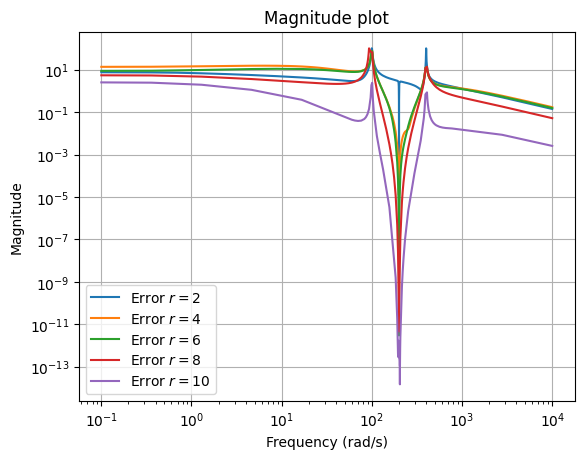

The ROMs are now more accurate around the interpolated frequency, which the error plot also shows.

fig, ax = plt.subplots()

for rom in roms_sigma:

err = fom - rom

err.transfer_function.mag_plot(w, ax=ax, label=f'Error $r = {rom.order}$')

_ = ax.legend()

The poles of the ROMs are as follows.

fig, ax = plt.subplots()

spectral_abscissas = []

for rom, marker in zip(roms_sigma, markers):

poles = rom.poles()

spectral_abscissas.append(poles.real.max())

ax.plot(poles.real, poles.imag, marker, label=f'ROM $r = {rom.order}$')

_ = ax.legend()

print(f'Maximum real part: {max(spectral_abscissas)}')

Maximum real part: 633.2912934294059

We observe that some of the ROMs are unstable. In particular, interpolation does not necessarily preserve stability, just as Petrov-Galerkin projection in general. A common approach then is using a Galerkin projection with \(W = V\) if \(A + A^T\) is negative definite. This preserves stability, but reduces the number of interpolated moments from \(2 r\) to \(r\).

Interpolation at multiple points¶

To achieve good approximation over a larger frequency range,

instead of local approximation given by higher-order interpolation at a single point,

one idea is to do interpolation at multiple points (sometimes called multipoint Padé),

whether of lower or higher-order.

pyMOR implements bitangential Hermite interpolation (BHI) for different types of Models,

i.e., methods to construct a reduced-order transfer function \(\widehat{H}\) such that

for given interpolation points \(\sigma_i\), right tangential directions \(b_i\), and left tangential directions \(c_i\), \(i = 1, \ldots, r\). Such interpolation is relevant for systems with multiple inputs and outputs, where interpolation of matrices

may be too restrictive. Here we focus on single-input single-output systems, where tangential and matrix interpolation are the same.

Bitangential Hermite interpolation is also relevant for \(\mathcal{H}_2\)-optimal model order reduction and methods such as IRKA (iterative rational Krylov algorithm), but we don’t discuss them in this tutorial.

Interpolation at multiple points can be achieved by concatenating different \(V\)

and \(W\) matrices discussed above.

In pyMOR, LTIBHIReductor can be used

directly to perform bitangential Hermite interpolation.

import numpy as np

from pymor.reductors.interpolation import LTIBHIReductor

bhi = LTIBHIReductor(fom)

freq = np.array([0.5, 5, 50, 500, 5000])

sigma = np.concatenate([1j * freq, -1j * freq])

b = np.ones((10, 1))

c = np.ones((10, 1))

rom_bhi = bhi.reduce(sigma, b, c)

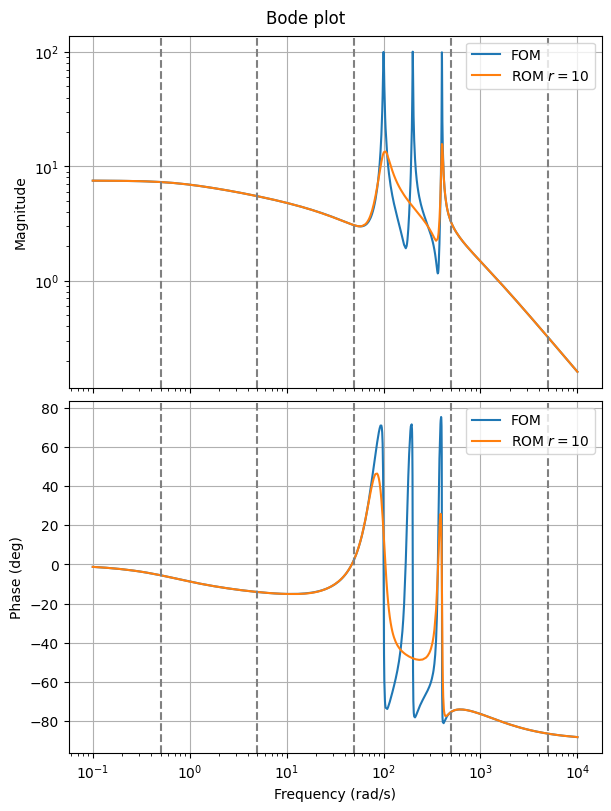

We can compare the Bode plots.

fig, ax = plt.subplots(2, 1, **bode_plot_opts)

fom.transfer_function.bode_plot(w, ax=ax, label='FOM')

rom_bhi.transfer_function.bode_plot(w, ax=ax, label=f'ROM $r = {rom_bhi.order}$')

for a in ax.flat:

for f in freq:

a.axvline(f, color='gray', linestyle='--')

_ = a.legend()

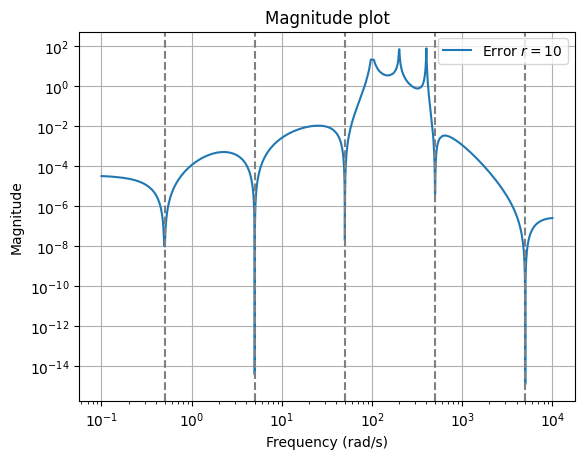

The error plot shows interpolation more clearly.

fig, ax = plt.subplots()

err = fom - rom_bhi

err.transfer_function.mag_plot(w, label=f'Error $r = {rom_bhi.order}$')

for f in freq:

ax.axvline(f, color='gray', linestyle='--')

_ = ax.legend()

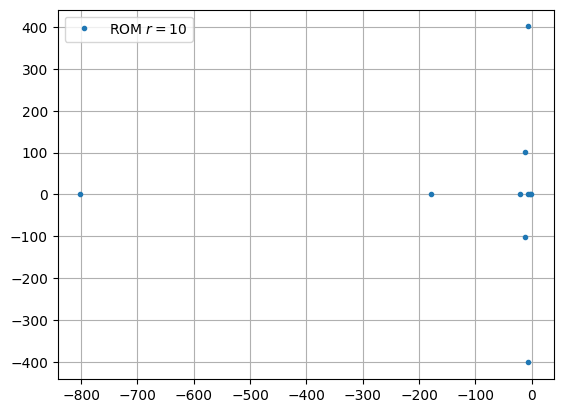

We can check stability of the system.

fig, ax = plt.subplots()

poles = rom_bhi.poles()

ax.plot(poles.real, poles.imag, '.', label=f'ROM $r = {rom.order}$')

_ = ax.legend()

print(f'Maximum real part: {poles.real.max()}')

Maximum real part: -1.0071270631815525

In this case, the ROM is asymptotically stable.

Download the code:

tutorial_interpolation.md,

tutorial_interpolation.ipynb.